1. Download VMware and install it;

2. Install the CentOS system in VMware;

Second, configure the Java environment in the virtual machine

1. Install the Java virtual machine (jdk-6u31-linux-i586.bin);

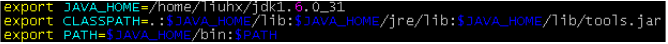

2. Configure environment variables

(1) Edit the file: vi /etc/profile

(2) Add the environment variables

(3) Apply the environment variables: source /etc/profile

Note: run these steps as the root user
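Step (2) does not list the lines to add. A minimal sketch of what would typically be appended to /etc/profile follows. It assumes the JDK was installed under /usr/java; that directory is an assumption, so adjust it to wherever jdk-6u31-linux-i586.bin was actually run.

```shell
# Assumed install location; the jdk-6u31 installer unpacks into a jdk1.6.0_31 directory
export JAVA_HOME=/usr/java/jdk1.6.0_31
# Put the JDK tools (java, javac, jps) ahead of anything else on PATH
export PATH=$JAVA_HOME/bin:$PATH
```

After source /etc/profile, running java -version should report version 1.6.0_31 if the path is right.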

Third, modify hosts

vim /etc/hosts and change it to: 127.0.0.1 qiangjin

Note: run this step as the root user

Fourth, modify the hostname

vim /etc/sysconfig/network and change it to:

NETWORKING=yes

HOSTNAME=qiangjin

To change the hostname temporarily, use

hostname qiangjin

To view the current hostname, use

hostname

Note: run these steps as the root user

Five, configure ssh

1. Execute in the current user's home directory

(1) ssh-keygen

(2) cat .ssh/id_rsa.pub >> .ssh/authorized_keys

(3) chmod 700 .ssh

(4) chmod 600 .ssh/authorized_keys

(5) Verify with ssh qiangjin; the login should succeed without asking for a password.

Six, unpack the CDH3 packages

1. Unpack hadoop-0.20.2-cdh3u3.tar.gz;

2. Unzip hbase-0.90.4-cdh3u3.tar.gz;

3. Unzip hive-0.7.1-cdh3u3.tar.gz;

4. Unzip zookeeper-3.3.4-cdh3u3.tar.gz;

5. Unzip sqoop-1.3.0-cdh3u3.tar.gz;

6. Unzip mahout-0.5-cdh3u3.tar.gz; (for data mining algorithms)

Note: unpack each archive with tar -xvf xxxx.tar.gz
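The six archives can also be unpacked in one pass. The sketch below is one way to do it; the helper name unpack_all and the example directories are illustrative and not part of the original setup.

```shell
# unpack_all SRC_DIR DEST_DIR: extract every .tar.gz found in SRC_DIR into DEST_DIR
# (helper name and arguments are illustrative)
unpack_all() {
  src_dir="$1"
  dest_dir="$2"
  mkdir -p "$dest_dir"
  for archive in "$src_dir"/*.tar.gz; do
    [ -e "$archive" ] || continue   # nothing matched the pattern
    tar -xzf "$archive" -C "$dest_dir"
  done
}

# Example call (paths are assumptions): unpack_all ~/downloads ~/cdh3
```

Extracting everything into a single cdh3 directory matches the cdh3/... paths used in the later steps.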

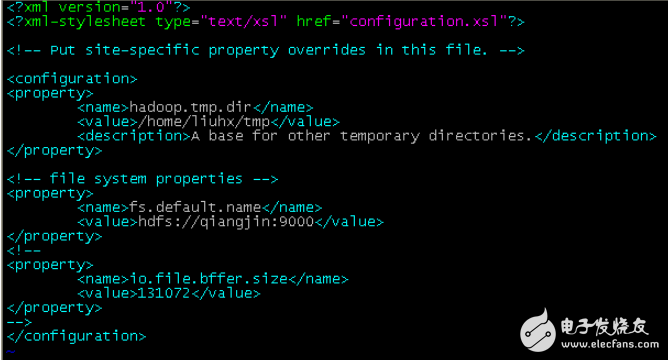

Seven, modify the hadoop configuration files

(1) Enter cdh3/hadoop-0.20.2-cdh3u3/conf

(2) Modify core-site.xml

Note: The hostname is used in the fs.default.name configuration;

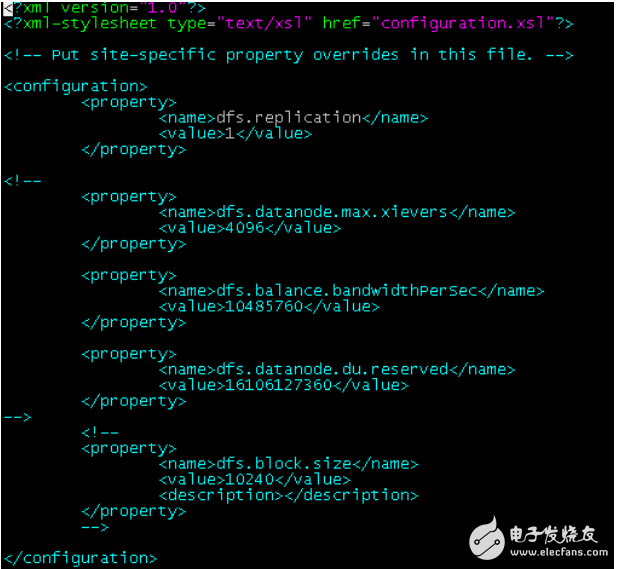
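For readers who cannot see the screenshot, here is a minimal sketch of a core-site.xml that sets fs.default.name to the hostname. Port 9000 is an assumption, and OUT_DIR is an illustrative variable. The file is written to a scratch directory by default so it can be inspected before being copied into cdh3/hadoop-0.20.2-cdh3u3/conf.

```shell
# OUT_DIR is illustrative; set it to the real conf directory to write in place
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
NAMENODE_HOST="qiangjin"   # hostname configured in /etc/hosts
NAMENODE_PORT="9000"       # assumed port, not taken from the original

cat > "$OUT_DIR/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://${NAMENODE_HOST}:${NAMENODE_PORT}</value>
  </property>
</configuration>
EOF

echo "wrote $OUT_DIR/core-site.xml"
```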

(3) Modify hdfs-site.xml

Note: On a single machine, dfs.replication is generally set to 1

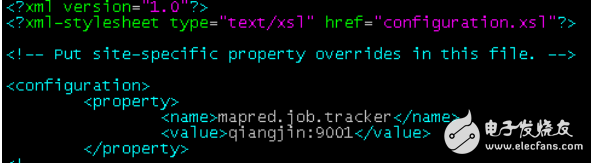
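A single-node hdfs-site.xml may need nothing beyond the replication setting. The sketch below writes only that property. OUT_DIR is an illustrative variable that defaults to a scratch directory, and any other properties shown in the screenshot are not reproduced here.

```shell
# OUT_DIR is illustrative; set it to the real conf directory to write in place
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

# Quoted heredoc delimiter: the XML is written exactly as shown
cat > "$OUT_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF

echo "wrote $OUT_DIR/hdfs-site.xml"
```

With only one datanode, a replication factor above 1 cannot be satisfied, and HDFS would report every block as under-replicated.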

(4) Modify mapred-site.xml

Note: mapred.job.tracker uses the machine's own hostname;
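The value of mapred.job.tracker has the form host:port with no URL scheme. A minimal sketch follows. Port 9001 is an assumption, and OUT_DIR is an illustrative variable that defaults to a scratch directory.

```shell
# OUT_DIR is illustrative; set it to the real conf directory to write in place
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
JOBTRACKER_HOST="qiangjin"   # this machine's hostname
JOBTRACKER_PORT="9001"       # assumed port, not taken from the original

cat > "$OUT_DIR/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>${JOBTRACKER_HOST}:${JOBTRACKER_PORT}</value>
  </property>
</configuration>
EOF

echo "wrote $OUT_DIR/mapred-site.xml"
```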

(5) Modify masters

(6) Modify slaves

(7) Modify hadoop-env.sh

Need to add environment variables

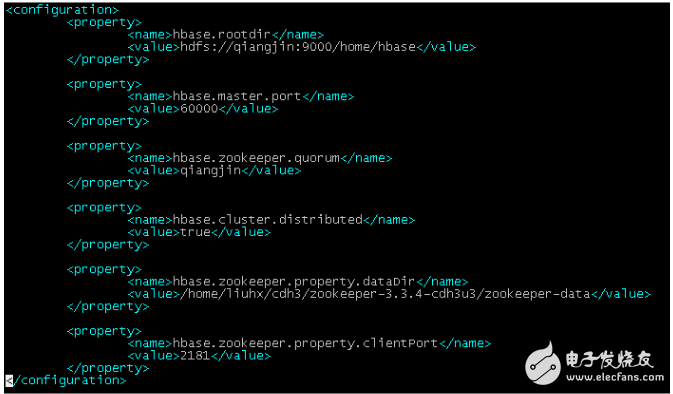

Eight, modify the HBase configuration

(1) Enter cdh3/hbase-0.90.4-cdh3u3/conf

(2) Modify hbase-site.xml

Figure 7

(3) Modify regionservers

(4) Modify hbase-env.sh

Need to add environment variables

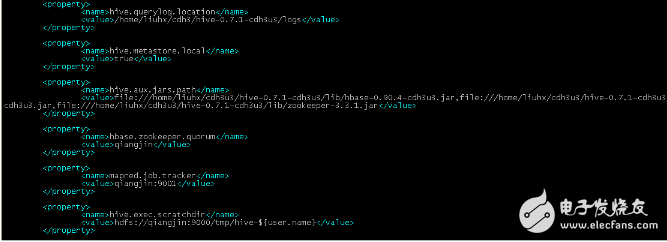

Nine, modify the hive configuration

(1) Enter cdh3/hive-0.7.1-cdh3u3/conf

(2) Add hive-site.xml and configure it

Note: Pay attention to the configuration of hbase.zookeeper.quorum, mapred.job.tracker, hive.exec.scratchdir, javax.jdo.option.ConnectionURL, javax.jdo.option.ConnectionUserName, and javax.jdo.option.ConnectionPassword;

Need to add environment variables

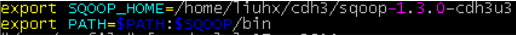

Ten, modify the sqoop configuration

Need to add environment variables

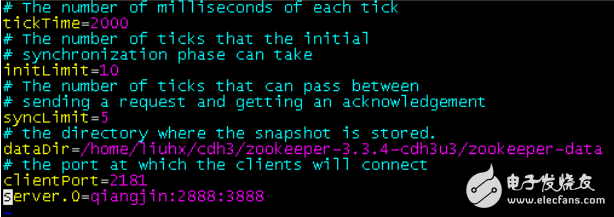

Eleven, modify the zookeeper configuration

(1) Enter cdh3/zookeeper-3.3.4-cdh3u3

(2) Create a new directory zookeeper-data

(3) Enter zookeeper-data, create a new file myid, and write 0 in it

(4) Enter cdh3/zookeeper-3.3.4-cdh3u3/conf

(5) Modify zoo.cfg

Note: Pay attention to the configuration of dataDir and server.0;

Need to add environment variables
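The ZooKeeper steps above can be sketched as a short script. ZK_HOME is an illustrative variable that defaults to a scratch directory; point it at the absolute path of cdh3/zookeeper-3.3.4-cdh3u3 for real use. The timing values, client port 2181, and the 2888:3888 ports are commonly used defaults assumed here, not values taken from the original.

```shell
# ZK_HOME is illustrative; use an absolute path so dataDir is absolute too
ZK_HOME="${ZK_HOME:-$(mktemp -d)}"
ZK_DATA="$ZK_HOME/zookeeper-data"

mkdir -p "$ZK_DATA" "$ZK_HOME/conf"

# myid holds this server's id; it has to match the N in the server.N line below
echo 0 > "$ZK_DATA/myid"

# Everything except dataDir and server.0 is an assumed default
cat > "$ZK_HOME/conf/zoo.cfg" <<EOF
tickTime=2000
initLimit=10
syncLimit=5
dataDir=$ZK_DATA
clientPort=2181
server.0=qiangjin:2888:3888
EOF

echo "wrote $ZK_HOME/conf/zoo.cfg"
```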

Twelve, modify the mahout configuration

Need to add environment variables

Thirteen, database JAR packages

(1) Put mysql-connector-java-5.1.6.jar into cdh3/hive-0.7.1-cdh3u3/lib

(2) Put ojdbc14.jar into cdh3/sqoop-1.3.0-cdh3u3/lib

Fourteen, format, start, and stop hadoop for the first time

1. Format hadoop: hadoop namenode -format

2. Start hadoop: start-all.sh

3. Stop hadoop: stop-all.sh

Note: Use jps or ps to check whether hadoop has started. Any problem during startup is printed to the screen. To view the status of hadoop, open http://qiangjin:50070

Fifteen, start hbase

(1) Start hbase: start-hbase.sh

(2) Stop hbase: stop-hbase.sh

(3) Enter the hbase shell: hbase shell

(4) List the tables in hbase (run inside the hbase shell): list

Note: Hadoop must already be running. To view the status of hbase, open http://qiangjin:60010

Sixteen, start zookeeper

(1) Start zookeeper: zkServer.sh start

(2) Stop zookeeper: zkServer.sh stop

Note: On a single machine, starting hbase also starts zookeeper;

Seventeen, start hive

(1) Start hive: hive

(2) List the tables (run inside the hive shell): show tables;

Eighteen, run the wordcount example

(1) Create file01 and file02 and put some text in them;

(2) Create an input directory in hdfs: hadoop fs -mkdir input

(3) Copy file01 and file02 to hdfs: hadoop fs -copyFromLocal file0* input

(4) Run wordcount: hadoop jar hadoop-examples-0.20.2-cdh3u3.jar wordcount input output

(5) View the results: hadoop fs -cat output/part-r-00000
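The five steps can be run as one script. In this sketch the sample sentences are illustrative (any text works), and the HDFS steps are skipped when the hadoop command is not on PATH. It assumes the script is run from the directory that contains the examples jar.

```shell
# Sample contents are illustrative
echo "Hello World Bye World" > file01
echo "Hello Hadoop Goodbye Hadoop" > file02

# Skip the HDFS steps when the hadoop command is not available
if command -v hadoop >/dev/null 2>&1; then
  hadoop fs -mkdir input
  hadoop fs -copyFromLocal file0* input
  hadoop jar hadoop-examples-0.20.2-cdh3u3.jar wordcount input output
  hadoop fs -cat output/part-r-00000
fi
```

With these two files the job should count Bye and Goodbye once each and Hadoop, Hello, and World twice each. The output directory must not exist before the job runs, so remove it before a rerun.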

Nineteen, import oracle data into hive

(1) Enter cdh3/sqoop-1.3.0-cdh3u3/bin

(2) Create a new directory importdata

(3) Enter the directory importdata

(4) Create a new sh file oracle-test.sh

(5) Run ./oracle-test.sh

(6) Enter hive to check whether the import succeeded.

Note: The parameters used for the hive import:

./sqoop import --append --connect $CONNECTURL --username $ORACLENAME --password $ORACLEPASSWORD -m 1 --table $oracleTableName --columns $columns --hive-import

Twenty, import oracle data into hbase

(1) Enter cdh3/sqoop-1.3.0-cdh3u3/bin

(2) Create a new directory importdata

(3) Enter the directory importdata

(4) Create a new sh file oracle-hbase.sh

(5) Run ./oracle-hbase.sh

(6) Enter the hbase shell to check whether the import succeeded.

Note: The parameters used for the hbase import:

./sqoop import --append --connect $CONNECTURL --username $ORACLENAME --password $ORACLEPASSWORD -m 1 --table $oracleTableName --columns $columns --hbase-create-table --hbase-table $hbaseTableName --hbase-row-key ID --column-family cf1

Twenty-one, configure the hbase to hive mapping

(1) Enter cdh3/hive-0.7.1-cdh3u3/bin

(2) Create a new directory mapdata

(3) Enter mapdata

(4) Create a new file hbasemaphivetest.q

(5) Run hive -f hbasemaphivetest.q

Note: The columns must correspond one to one, and the types must match;

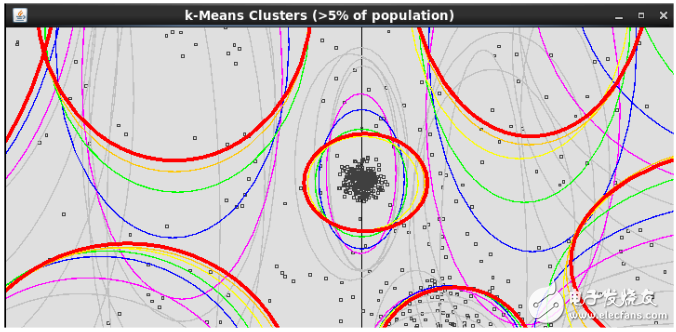

Twenty-two, mahout operation

1. Run the example

(1) Import the data file "synthetic_control.data" used by the example. In the console, run hadoop fs -put synthetic_control.data /user/liuhx/testdata/

(2) Run the example program in the console. It takes a while because it iterates 10 times:

hadoop jar mahout-examples-0.5-cdh3u3-job.jar org.apache.mahout.clustering.syntheticcontrol.kmeans.Job

2. To view the results, enter the command

mahout vectordump --seqFile /user/liuhx/output/data/part-m-00000

3. For a graphical display, enter the following command

hadoop jar mahout-examples-0.5-cdh3u3-job.jar org.apache.mahout.clustering.display.DisplayKMeans

Twenty-three, hadoop Eclipse plugin

1. Install Eclipse

2. Import cdh3/hadoop-0.20.2-cdh3u3/src/contrib/eclipse-plugin project

3. Modify plugin.xml, mainly the configuration of the jar packages in the runtime section;

4. Run it with Run As -> Eclipse Application

5. In the Eclipse SDK instance that starts, configure the map/reduce location so that it points to the running hadoop environment.

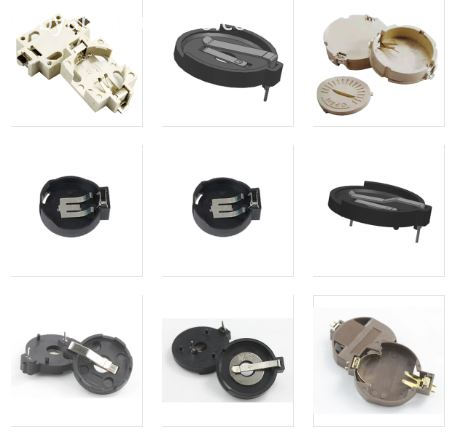

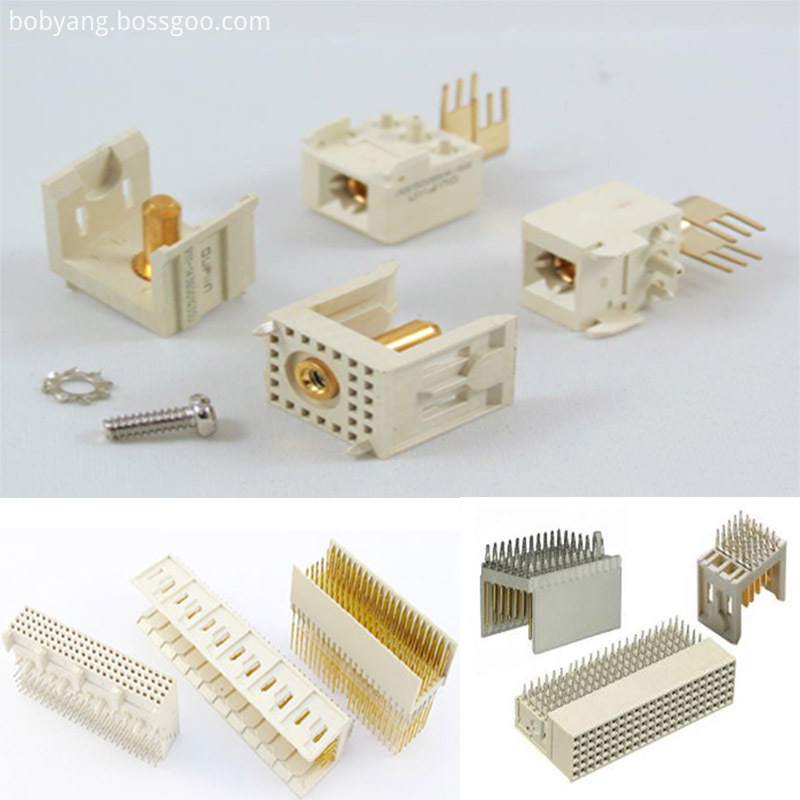
